Science in the time of COVID-19: Reflections on the UK Events Research Programme

Design

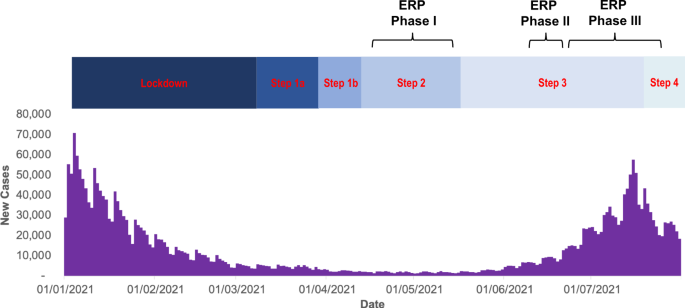

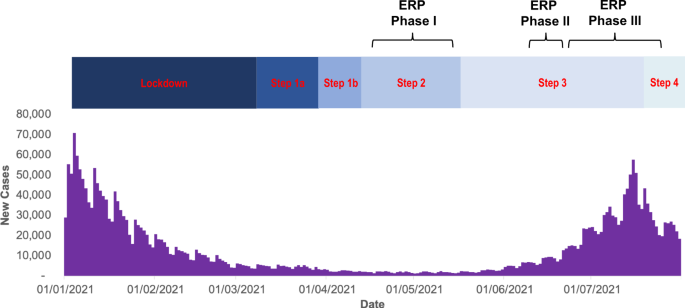

A range of study designs was identified in the Science Framework3 to optimise the ability to infer causality between events, mitigations and risk of transmission, including randomised controlled studies and meta-analyses across events. Although a protocol with sample size calculations was drafted for a large randomised controlled trial3, no such design was adopted, possibly because of perceived operational difficulties. The lack of suitable control groups, combined with low prevalence during the initial phases of the ERP (Fig. 1), relatively small crowds and the low rate of PCR test returns (see Core measures), made it difficult to estimate the risk of transmission during Phases I and II. In Phase III, the larger events, the higher prevalence at that time and the adoption of the self-controlled case-series analysis4 provided the potential to draw stronger conclusions about transmission risk.
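A back-of-the-envelope sketch may help show why small crowds, low prevalence and low testing rates limit what can be inferred. It assumes, hypothetically, that each attendee independently has probability `attack_rate` of infection and that each infection has probability `test_rate` of being confirmed by PCR, so the expected number of detected cases is the product of the three quantities. For a Poisson count k, the standard error of the log rate is roughly 1/√k. The function names and all numbers below are illustrative assumptions, not ERP data or the ERP's own sample size calculations.

```python
import math


def expected_detected_cases(attendees: int, attack_rate: float, test_rate: float) -> float:
    """Expected number of infections that are both acquired and detected.

    Hypothetical model: every attendee independently has probability
    `attack_rate` of infection, and each infection has probability
    `test_rate` of being confirmed by a returned PCR test.
    """
    return attendees * attack_rate * test_rate


def log_rate_standard_error(cases: float) -> float:
    """Approximate standard error of log(rate) for a Poisson count."""
    if cases <= 0:
        return math.inf  # no detected cases: the rate cannot be estimated
    return 1.0 / math.sqrt(cases)


# Illustrative (made-up) scenarios, not figures from the ERP.
scenarios = {
    "small event, low prevalence, low testing": (5_000, 0.001, 0.15),
    "large event, higher prevalence, routine testing": (60_000, 0.01, 0.5),
}

for label, (n, attack, tested) in scenarios.items():
    k = expected_detected_cases(n, attack, tested)
    se = log_rate_standard_error(k)
    print(f"{label}: {k:.2f} expected cases, SE(log rate) ~ {se:.2f}")
```

In the first scenario fewer than one detected case is expected, so almost nothing can be said about transmission risk; the 1/√k approximation is itself unreliable at such small counts, which reinforces the point.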

The programme attempted to cover a wide range of events and venues. Because the selection of events involved many different parties (the Science Board, public health bodies, local and central government, and event organisers), it did not reflect scientific needs alone. Transparent, mainly design-based criteria for choosing events would have strengthened the programme and increased attendees' understanding of it. Such criteria might also have increased confidence among the wider public and others in the scientific integrity of the ERP5.

Core measures

To facilitate the pooling of data across studies, the ERP aimed to collect a common set of standardised measures. The risk of transmission proved the most difficult to measure. In Phases I and II, it was assessed by requiring participants to undertake two PCR tests, one within 48 h before attending and another 5 days after the event. Separately, entry to the venue required either a recent negative lateral flow device (LFD) test (ideally taken 24 h before the event, but sometimes up to 72 h before) or, in Phases II and III, a certificate of vaccination. These tests had different purposes: the LFD test was required to reduce the risk of transmission at events, whereas the PCR tests were for research purposes. However, this distinction was frequently lost on attendees and organisers, likely contributing to low rates of return of PCR tests, estimated at 15% (7764/51,319) across events in Phase I and ranging from 3 to 61% between events6. This reflected several problems. The first was poorly integrated testing and ticketing systems (e.g. the need to order each of the three required tests separately through the national system). The second was ineffective communication of the requirements for participation in the ERP. Although tickets for Phase I events were issued only to those who had signed a consent form, there was insufficient time to evaluate how well signatories understood what was required of them. Given the higher PCR return rates observed in Phase I when incentives were offered6, the Science Board requested that incentives be offered for test returns. There was, however, insufficient time to resolve legal concerns about the use of incentives, which transpired to be unfounded.
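The pooled return rate quoted above is a simple ratio, and because pooling weights each event by its size, the pooled figure can sit far from the midpoint of the per-event range. The sketch below reproduces the pooled Phase I figure from the quoted counts and then, using two made-up events (not ERP data), shows how pooling differs from averaging per-event rates. The function name `return_rate` is hypothetical.

```python
def return_rate(returned: int, expected: int) -> float:
    """Proportion of expected PCR results that were actually returned."""
    if expected <= 0:
        raise ValueError("expected must be positive")
    return returned / expected


# Pooled Phase I figures quoted in the text (7764 of 51,319).
phase_one = return_rate(7_764, 51_319)
print(f"Pooled Phase I return rate: {phase_one:.1%}")  # 15.1%

# Two hypothetical events: a small one with good returns, a large one with poor returns.
events = [(610, 1_000), (600, 20_000)]  # (returned, expected)
mean_of_rates = sum(return_rate(r, e) for r, e in events) / len(events)
pooled = return_rate(sum(r for r, _ in events), sum(e for _, e in events))
print(f"Mean of event rates: {mean_of_rates:.1%}")  # 32.0%
print(f"Pooled rate: {pooled:.1%}")  # 5.8%
```

Large events with poor returns dominate the pooled rate, which is why a pooled figure of 15% is compatible with individual events reaching 61%.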

The self-controlled case-series design adopted in Phase III4 circumvented these problems of assessing the risk of transmission through multiple PCR tests in all participants. This design could assess the risk of transmission from attending events without requiring pre- and post-event testing of all participants, relying solely on routine PCR testing run through the national surveillance and contact tracing system, NHS Test and Trace. Ethics approval was granted on the understanding that, although written consent was not required, event organisers would ensure that all participants received written information stating that attendance was conditional upon participating in the ERP. For one event, a European football tournament, many tickets had been sold before the event was included in the ERP, and the event organisers were unwilling or unable to inform ticket holders that their attendance was conditional upon participation in the study. For two other events in Phase III, event organisers sought written consent even though it was not a condition of ethics approval. For the first of these multi-day events, fewer than 5% of over 300,000 attendees signed consent forms, which blocked receipt of data for more than 95% of them. For the second, 17% of around 350,000 attendees signed consent forms, blocking receipt of data for the remaining 83%. These restrictions, together with other, as yet unexplained, reasons that prevented access to data for some of the most well-attended events, substantially reduced the study's statistical power.
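The self-controlled case-series idea can be sketched in its simplest form: only infected people (cases) are analysed, and each case's observation period is split into a risk window after attending an event and a control window. Conditional on being infected, a case's infection falls in the risk window with probability ρr/(ρr + c), where r and c are the window lengths and ρ is the relative incidence to be estimated. The sketch below finds the maximum-likelihood ρ by bisection on the log scale, where the score is monotone. It is a simplified illustration under stated assumptions (one binary exposure window, no age or calendar-time adjustment); the `Case` structure, window lengths and data are hypothetical and this is not the ERP's actual analysis.

```python
import math
from dataclasses import dataclass


@dataclass
class Case:
    """One infected person in a simplified SCCS analysis (hypothetical structure)."""
    risk_days: float      # length of this person's post-event risk window
    control_days: float   # length of the rest of their observation period
    in_risk_window: bool  # True if the positive test fell in the risk window


def sccs_relative_incidence(cases: list[Case]) -> float:
    """Maximum-likelihood relative incidence for one binary exposure window.

    Each case contributes the conditional probability that their infection
    fell where it did: rho*r / (rho*r + c) if in the risk window, otherwise
    c / (rho*r + c). On the log(rho) scale the score (observed minus expected
    risk-window cases) is strictly decreasing, so bisection finds the root.
    """
    n_risk = sum(c.in_risk_window for c in cases)
    if n_risk == 0 or n_risk == len(cases):
        raise ValueError("need cases both inside and outside the risk window")

    def score(log_rho: float) -> float:
        rho = math.exp(log_rho)
        expected = sum(
            rho * c.risk_days / (rho * c.risk_days + c.control_days) for c in cases
        )
        return n_risk - expected

    lo, hi = -20.0, 20.0
    for _ in range(200):
        mid = (lo + hi) / 2
        if score(mid) > 0:
            lo = mid  # more risk-window cases observed than expected: raise rho
        else:
            hi = mid
    return math.exp((lo + hi) / 2)


# Illustrative data only: 40 hypothetical cases, each observed for 42 days,
# with a 14-day risk window after attending an event.
example = [Case(14, 28, i < 20) for i in range(40)]
print(round(sccs_relative_incidence(example), 3))  # 2.0
```

When every case has the same window lengths, the estimate reduces to (cases in risk window / r) divided by (cases in control window / c); here (20/14)/(20/28) = 2. Because each person acts as their own control, no pre- and post-event testing of non-cases is needed, which is the property the ERP relied on.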

The need to share and link data, with appropriate regulatory oversight, is one of the ten lessons from the pandemic highlighted in a report from the Office for Statistics Regulation (OSR)7.

Ethics

Ethical considerations guiding the Science Board drew upon the report of an international working group, chaired by one of us (MP), on conducting research in global health emergencies8. The report highlighted the importance, in such contexts, of generating high-quality evidence that contributes to reducing harm, of treating everyone involved with equal moral respect, and of effective engagement.

The Science Board strongly advised that event organisers should obtain written, informed consent from participants. This would provide some reassurance that participants understood that the events were part of the ERP, that attending likely involved some additional risk compared with not attending, and that the success of the ERP in generating useful knowledge required them to undertake pre- and post-event tests (in Phases I and II) and return the results to the programme. Written consent was required as part of research ethics committee approvals for studies of the risk of transmission in Phases I and II, but not in Phase III, for which event organisers were required only to inform ticket holders that attendance involved research participation. Failure to meet this requirement in practice resulted in the loss of access to much data, as described above in Core measures. Partly in response to this, and on the Science Board’s recommendation, a Data Monitoring and Ethics Committee, independent of the Science Board, was set up in Phase III to receive and investigate any safety concerns about events included in the ERP9.

Open science

Open Science principles10, the norm for most health and medical research, are not yet the norm for policy evaluation. Explicitly adopting these principles to guide the work of the ERP was, therefore, unusual, but it was consistent with the open science principles advocated by SAGE11 and had the potential to strengthen the evidence generated. The principles were not always adhered to. Although protocols for all studies were published before data collection was completed, only some of those in Phase I were published before data collection had started. In part, this reflected the extremely challenging timelines that research teams were working to, with data collection starting before some protocols were finalised. Once the Phase I findings were complete, their publication was delayed because they were processed as a policy document, requiring ministerial approval for publication, rather than as a scientific document. Clarification from the Government Office for Science removed this obstacle to the timely publication of Phase II and III results.

Judged against the standards of the Code of Practice for Statistics set by the Office for Statistics Regulation (OSR), data were, on occasion, released early without the accompanying information needed to judge their quality and interpretation. More broadly, Open Science is about informing the public of what is being done on their behalf, giving them a basis on which to judge its trustworthiness, which is core to fostering trust12. Following the ten lessons from the pandemic set out by the OSR should help to avoid such problems and promote the appropriate use of statistics for the public good in a way that sustains public confidence7.